Communication Protocol

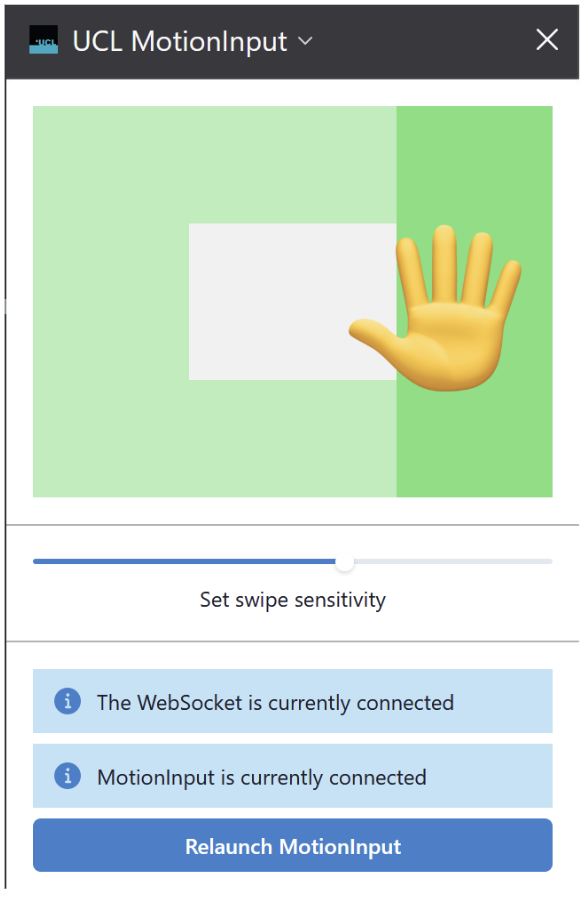

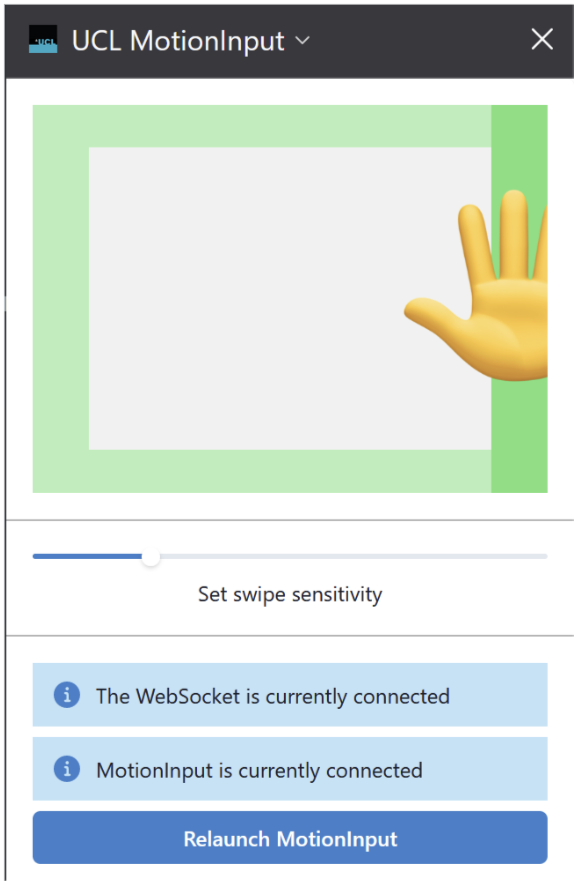

Enabling bidirectional communication between the WebExtension frontend and the MotionInput backend is an essential feature of our solution. The frontend needs to receive continual updates from the backend, for example on hand positions, gesture sensitivity, direction of movement and hand orientation. The backend, in turn, needs to receive any configuration changes made in the WebExtension frontend.
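The two directions of traffic can be sketched as two message builders, one per side. This is a minimal illustration, not MotionInput's actual schema. The envelope keys (`type`, `data`) and every payload field name below (`hand_position`, `orientation`, `direction`, `sensitivity`, `key`, `value`) are hypothetical. Only the message type names `mi_update` and `mi_config` come from this page.

```python
import json
from dataclasses import dataclass, asdict


# Hypothetical backend -> frontend payload; field names are assumptions.
@dataclass
class HandUpdate:
    hand_position: tuple[float, float]  # normalised (x, y), assumed
    orientation: str
    direction: str
    sensitivity: float


# Hypothetical frontend -> backend payload for one configuration change.
@dataclass
class ConfigChange:
    key: str
    value: object


def make_update(update: HandUpdate) -> str:
    """Encode a backend -> frontend update as an 'mi_update' JSON message."""
    # The {"type": ..., "data": ...} envelope is an assumed layout.
    return json.dumps({"type": "mi_update", "data": asdict(update)})


def make_config(change: ConfigChange) -> str:
    """Encode a frontend -> backend change as an 'mi_config' JSON message."""
    return json.dumps({"type": "mi_config", "data": asdict(change)})
```

Note that JSON has no tuple type, so `hand_position` arrives on the other side as a two-element list.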

Because web technologies are sandboxed and heavily restrict access to the host operating system, we cannot use standard inter-process mechanisms such as lock files, process pipes and other synchronization primitives. To get around this, as mentioned on our system design page, we developed a lightweight intermediary server that sits between the frontend and backend and talks to each side using a protocol suited to that platform: ZeroMQ for the backend and WebSockets for the frontend.
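The relay role of the intermediary server can be sketched with two independent one-way "pumps", one per direction. This is a minimal sketch under a loud assumption: plain `asyncio.Queue` objects stand in for the real ZeroMQ and WebSocket connections, and `None` is used as a hypothetical shutdown signal. In the real server, the backend-facing queues would be fed from and drained into a ZeroMQ socket, and the frontend-facing ones into WebSocket clients. The relay itself never parses the messages; it only forwards them.

```python
import asyncio


async def pump(source: asyncio.Queue, sink: asyncio.Queue) -> None:
    """Forward every message from source to sink unchanged.

    None is treated as a shutdown sentinel (an assumption of this sketch).
    """
    while True:
        message = await source.get()
        if message is None:
            break
        await sink.put(message)


async def relay(
    backend_in: asyncio.Queue,
    frontend_out: asyncio.Queue,
    frontend_in: asyncio.Queue,
    backend_out: asyncio.Queue,
) -> None:
    """Relay traffic in both directions at once (stand-in for ZeroMQ <-> WebSocket)."""
    await asyncio.gather(
        pump(backend_in, frontend_out),  # backend -> frontend
        pump(frontend_in, backend_out),  # frontend -> backend
    )


async def demo() -> tuple[str, str]:
    """Push one message each way through the relay and return what arrived."""
    b_in, f_out, f_in, b_out = (asyncio.Queue() for _ in range(4))
    await b_in.put('{"type": "mi_status"}')
    await b_in.put(None)
    await f_in.put('{"type": "mi_config"}')
    await f_in.put(None)
    await relay(b_in, f_out, f_in, b_out)
    return f_out.get_nowait(), b_out.get_nowait()


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Keeping the two directions as separate tasks means a burst of backend updates never blocks a configuration change travelling the other way.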

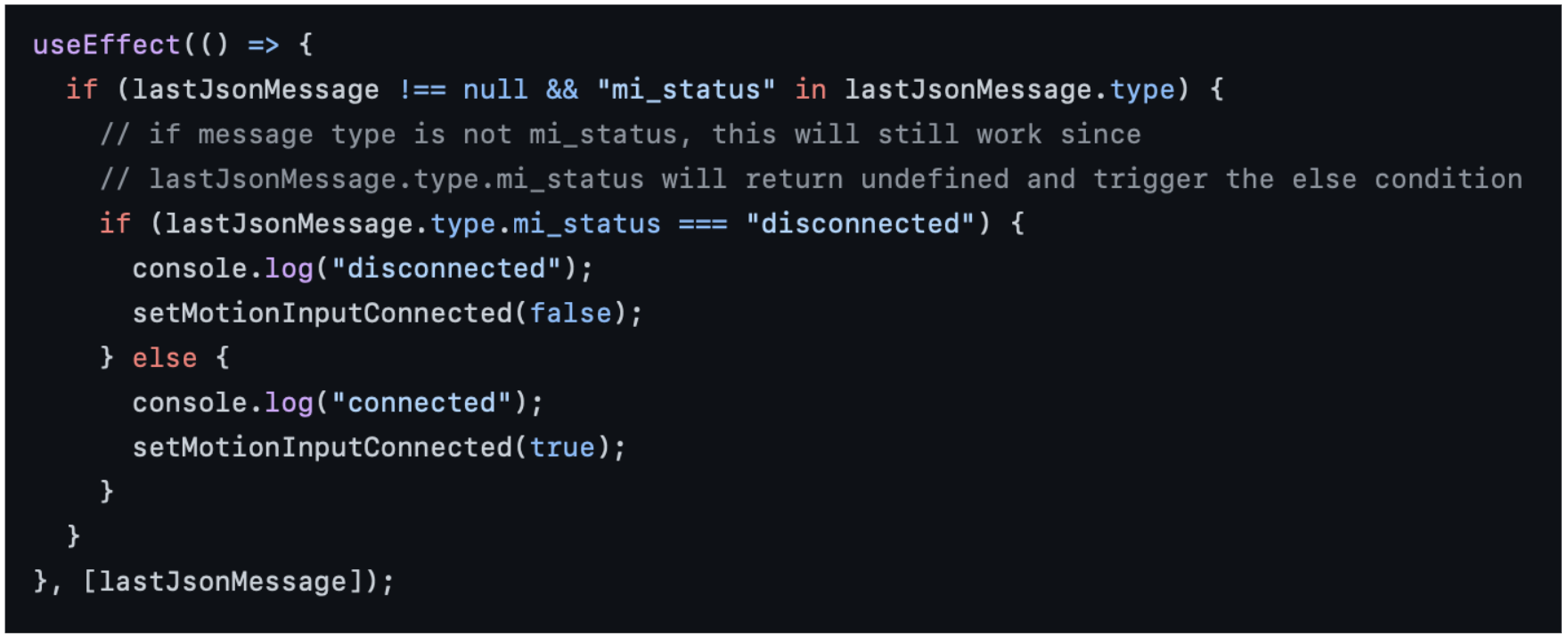

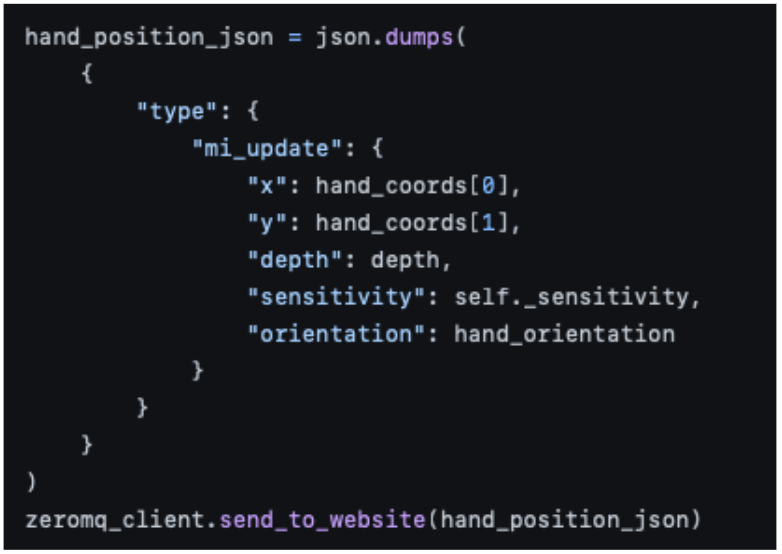

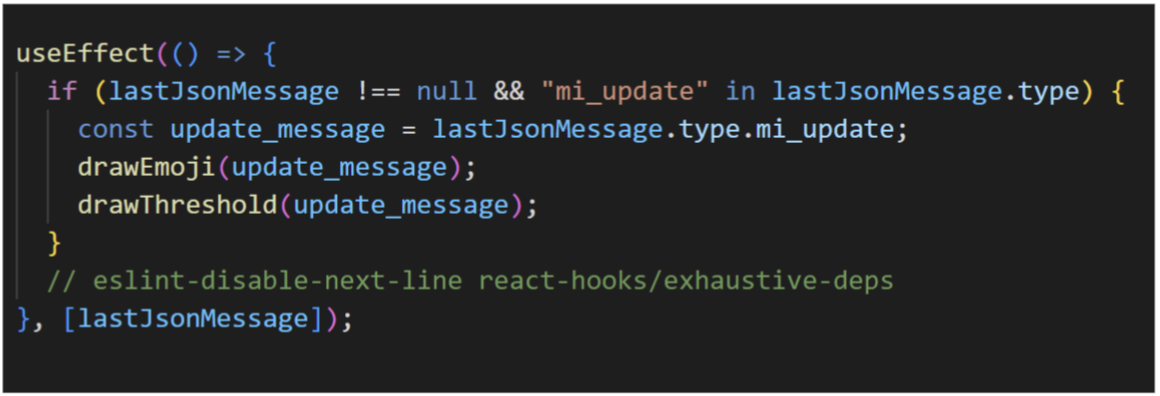

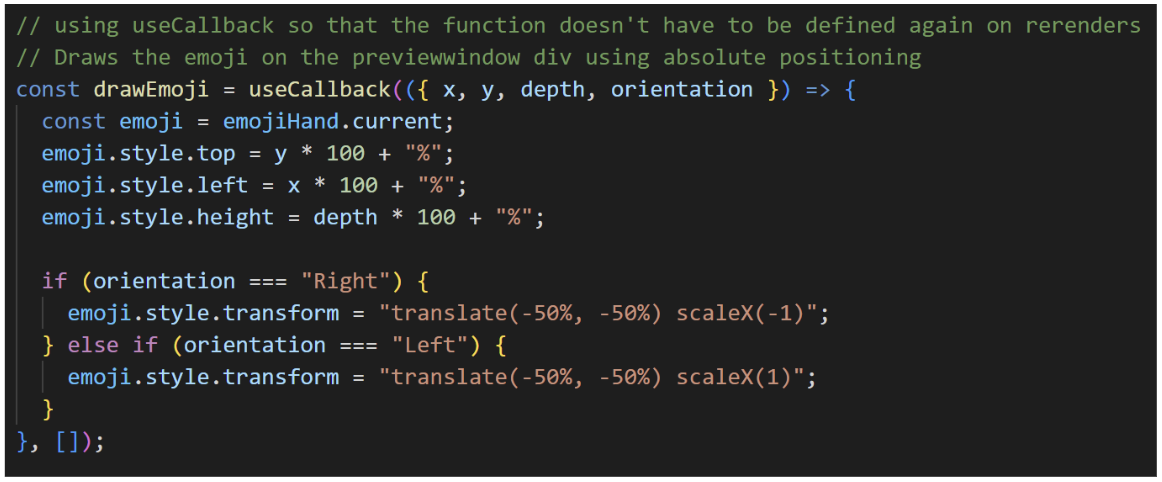

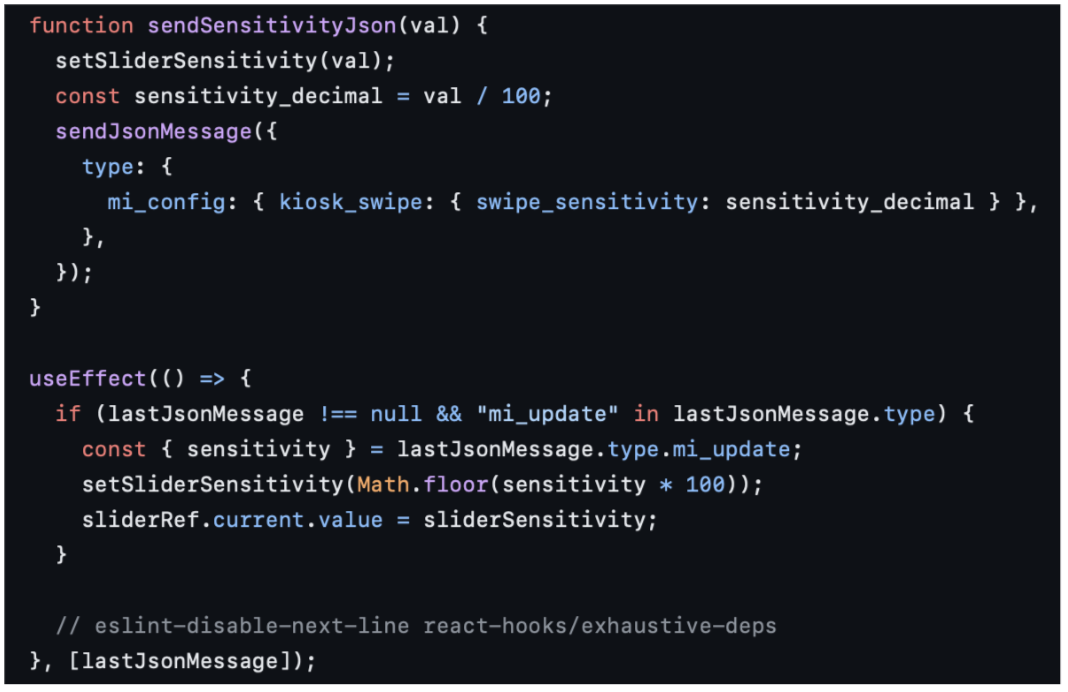

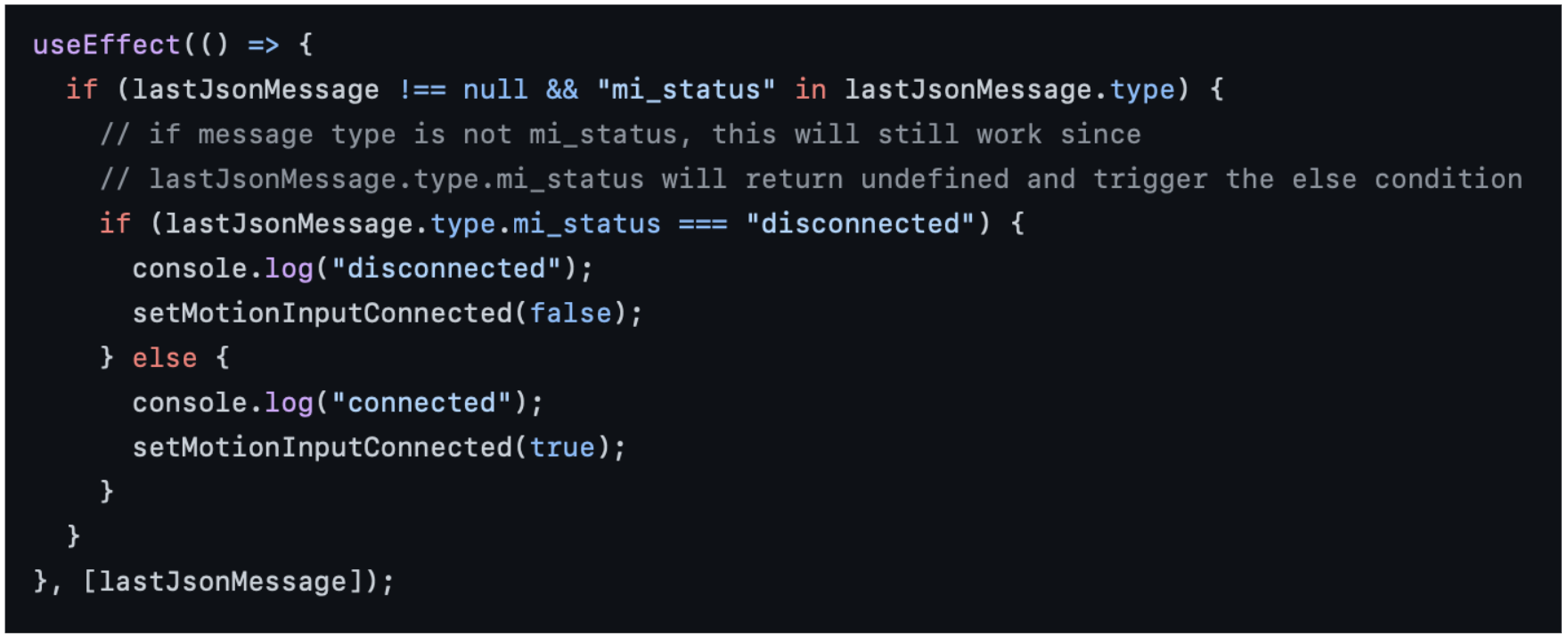

The core function of the intermediary server is to act as a relay that passes messages along. Senders on the frontend and backend send JSON-encoded messages to the intermediary server, which forwards them to the other side, where the receivers can handle the data however they need. The JSON messages follow a stateless design: each message is self-contained and interpreted independently of the others, so a dropped message does not affect how later messages are handled. This allows for high message throughput without having to guarantee that every message arrives. The JSON schema is also simple and defines a small number of message types, such as `mi_status`, `mi_update` and `mi_config`. On receiving a message, a receiver on either side unpacks the data according to its type and applies any changes accordingly.
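The receiving side described above, decoding each message on its own and routing it by type, can be sketched as a small dispatcher. This is an illustrative Python sketch, not the WebExtension's actual code (which runs in the browser). The `type`/`data` envelope keys and the handler names are assumptions; a dictionary stands in for the connection status element that an `mi_status` message would update.

```python
import json
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


def make_dispatcher(handlers: dict[str, Handler]) -> Callable[[str], bool]:
    """Return a function that decodes one JSON message and routes it by type.

    Each call is stateless: nothing is remembered between messages, so a
    dropped message cannot affect how later ones are handled.
    """

    def dispatch(raw: str) -> bool:
        try:
            message = json.loads(raw)
        except json.JSONDecodeError:
            return False  # ignore malformed input instead of crashing
        if not isinstance(message, dict):
            return False  # assumed envelope is a JSON object
        handler = handlers.get(message.get("type"))
        if handler is None:
            return False  # unknown or missing type: ignore it
        handler(message.get("data", {}))  # "data" key is an assumption
        return True

    return dispatch


# Usage: a dict stands in for the frontend's connection status element.
status = {"connected": False}


def on_status(data: dict[str, Any]) -> None:
    status["connected"] = bool(data.get("connected"))


dispatch = make_dispatcher({"mi_status": on_status})
dispatch('{"type": "mi_status", "data": {"connected": true}}')
```

Returning `False` for unknown or malformed messages, rather than raising, fits the stateless design: a bad message is simply skipped and the next one is processed normally.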

In the example below, when the WebExtension frontend receives a message of type `mi_status`, it updates the connection status element on the frontend.