How do you use kiosk software?

We extensively use kiosk software to expedite the food ordering process for our customers. It has significantly helped us reduce waiting times.

What sort of functionality should kiosk software have?

Primarily, it should be designed for touch input, i.e., have large, tappable elements. To that end, we strictly follow a grid-based layout for simplicity and ease of access to elements. It should also allow for linking between elements and be able to perform actions such as adding items to the cart and applying vouchers.

What do you mean when you refer to the term "elements"?

Elements are things like menu items, text boxes, image placeholders, and navigation bars. I would expect kiosk software to be able to add different types of elements to fulfil all our use cases and connect these elements together for an interactive experience.

What difficulties do you find with current kiosk software development tools?

Apart from the cost, a major difficulty is predicting what the final product will look like. I would love to be able to specify the exact dimensions and see an approximate preview of how the UI would look on actual kiosk hardware. It would also be nice if this preview could simulate interactions between the elements.

Do you think you are able to cater to all your users with the current kiosk software tools?

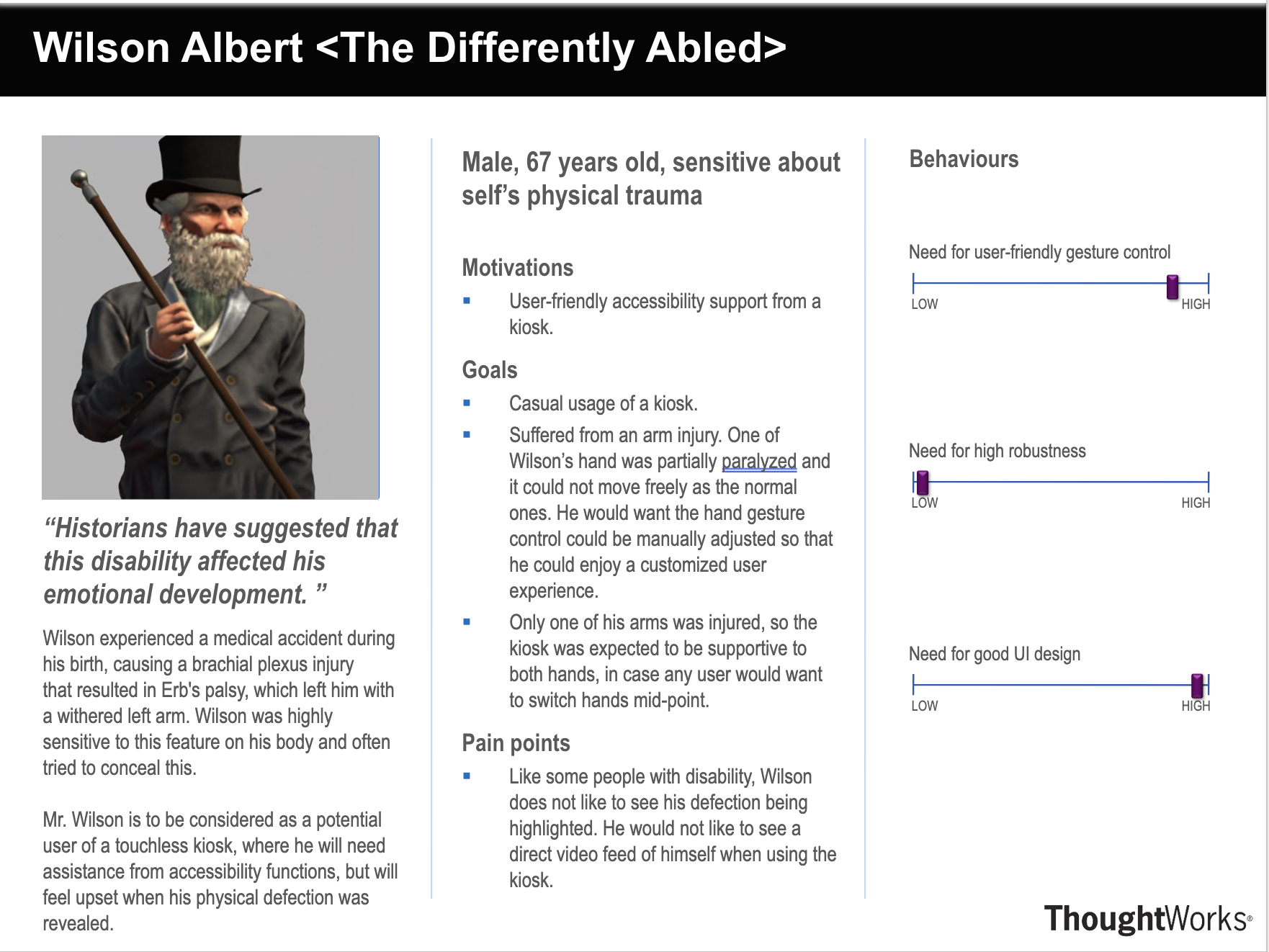

Definitely not. Kiosk software is severely lacking when it comes to catering to users with disabilities. Features like text-to-speech and color-blind options do exist and are nice to have. However, many more could be implemented, such as zoom and text scaling for people with vision impairments.

We are currently working on software that can replace conventional touch input with a wide range of gestures (facial, hand, eye, body, etc.). Would you be interested in such technology?

Absolutely, I think that sounds like a fantastic idea. If you could create a kiosk development tool that integrates these gestures, I think we could better fulfil the needs of our customers with disabilities. It would be even better if the additional hardware required were minimal and inexpensive to add.