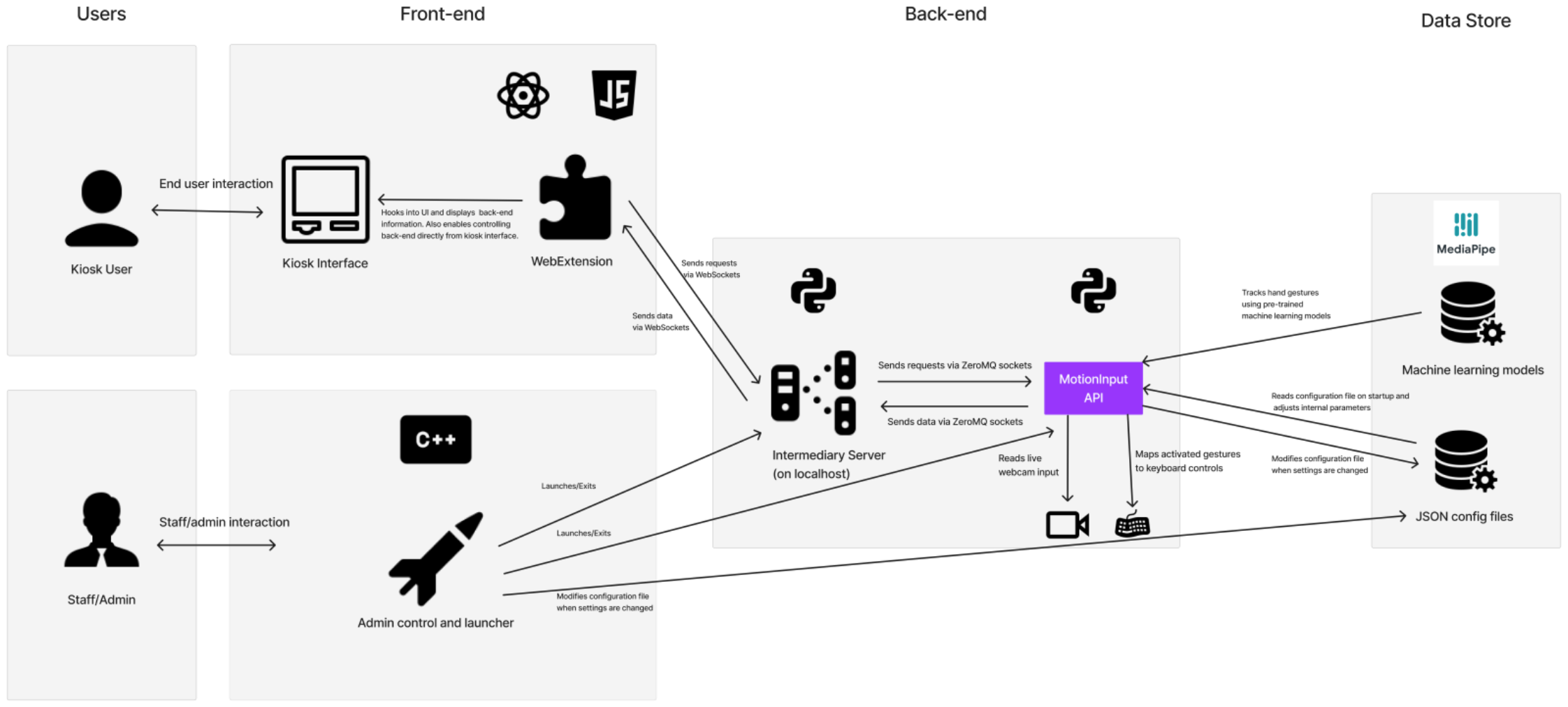

Based on our personas and requirements gathering, we have identified two distinct types of users: staff/admin and the general public.

The general public interacts with the kiosk interface directly, so for them we provide a WebExtension front-end built with React and JavaScript. Because it is a browser extension, this front-end can be attached as a sidebar to any existing browser-based kiosk interface, where it displays essential information from the backend: a live preview of the user's hand (which makes gestures easier to perform), the live swipe-detection boundaries, the gesture sensitivity settings, and general status information.

For staff/admin, we provide an admin control panel front-end built in C++ with the Microsoft Foundation Class (MFC) library. The control panel sets the program's initial configuration, such as gesture sensitivity, camera selection, hand detection, crowd rejection confidence, and more. Staff/admin can also start or stop the backend processes with a single button click.